My research is on interactive machine learning (ML), where learning agents actively engage in data collection to make decisions or gain useful insights. The interactive nature of the learning process lets agents focus on collecting data from the relevant parts of the environment, which reduces data collection effort (effort that often translates to onerous human labor or experimental cost). My research goal is to understand and establish principled ways to design interactive machine learning algorithms with efficiency guarantees (e.g., sample efficiency and computational efficiency), and to evaluate them experimentally on simulated and real-world datasets and environments.

Acknowledgments

We are grateful for funding support from the University of Arizona Technology and Research Initiative Fund (TRIF) and the National Science Foundation, IIS-2440266 (CAREER: Foundations of Interactive Machine Learning with Rich Feedback).

Below are some research topics I have been working on:

Imitation Learning

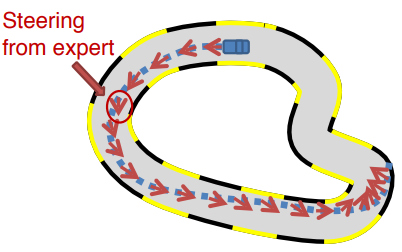

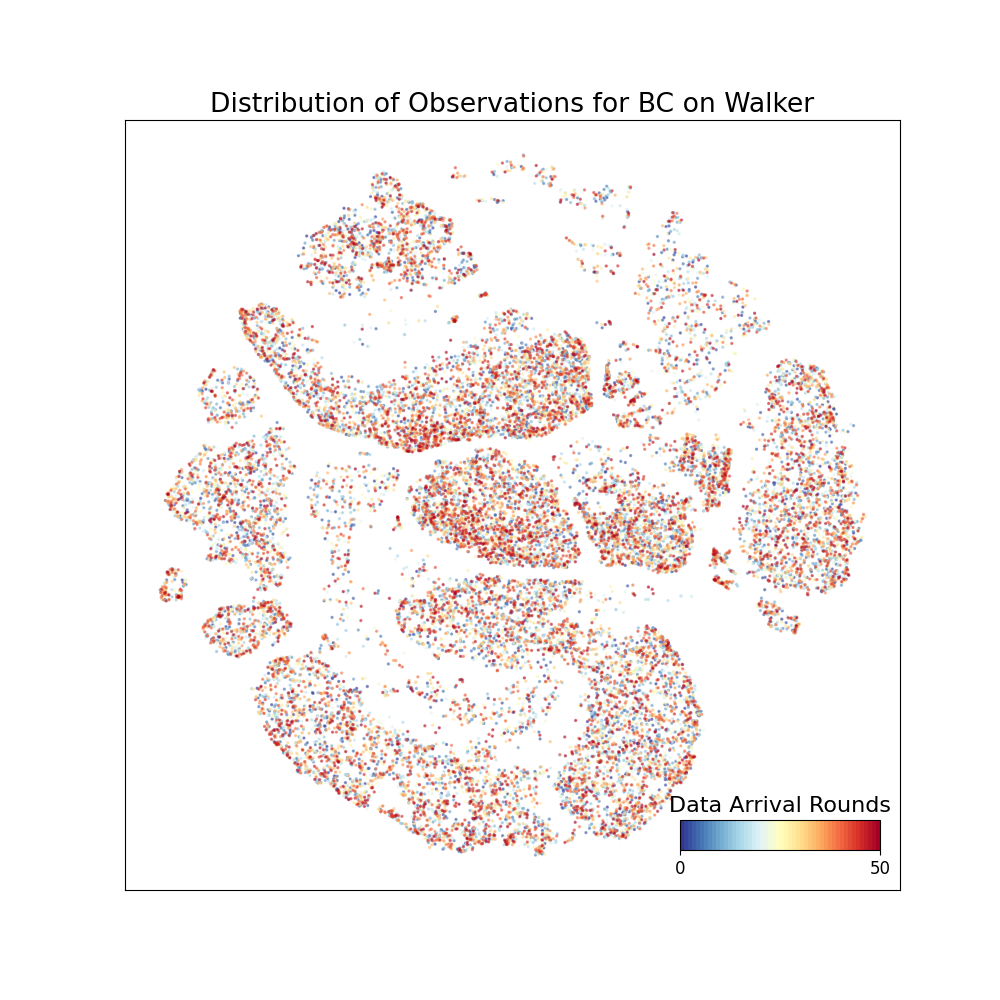

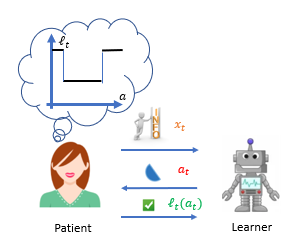

Imitation learning (IL), or learning from demonstrations, models the setting where a learning agent learns from a demonstrating expert to acquire intelligent sequential decision-making behavior. Compared with reinforcement learning, imitation learning has two advantages: (1) it gets around the reward misspecification problem, and (2) it can mitigate the challenge of exploration. This paradigm has been successfully deployed in, e.g., robotics and autonomous driving. My research in imitation learning has focused on understanding the power of interactive expert demonstrations: if we have an expert that can provide real-time, interactive action demonstrations (cf. offline IL, where expert demonstration trajectories are readily available), how can we best utilize the expert and save its effort?

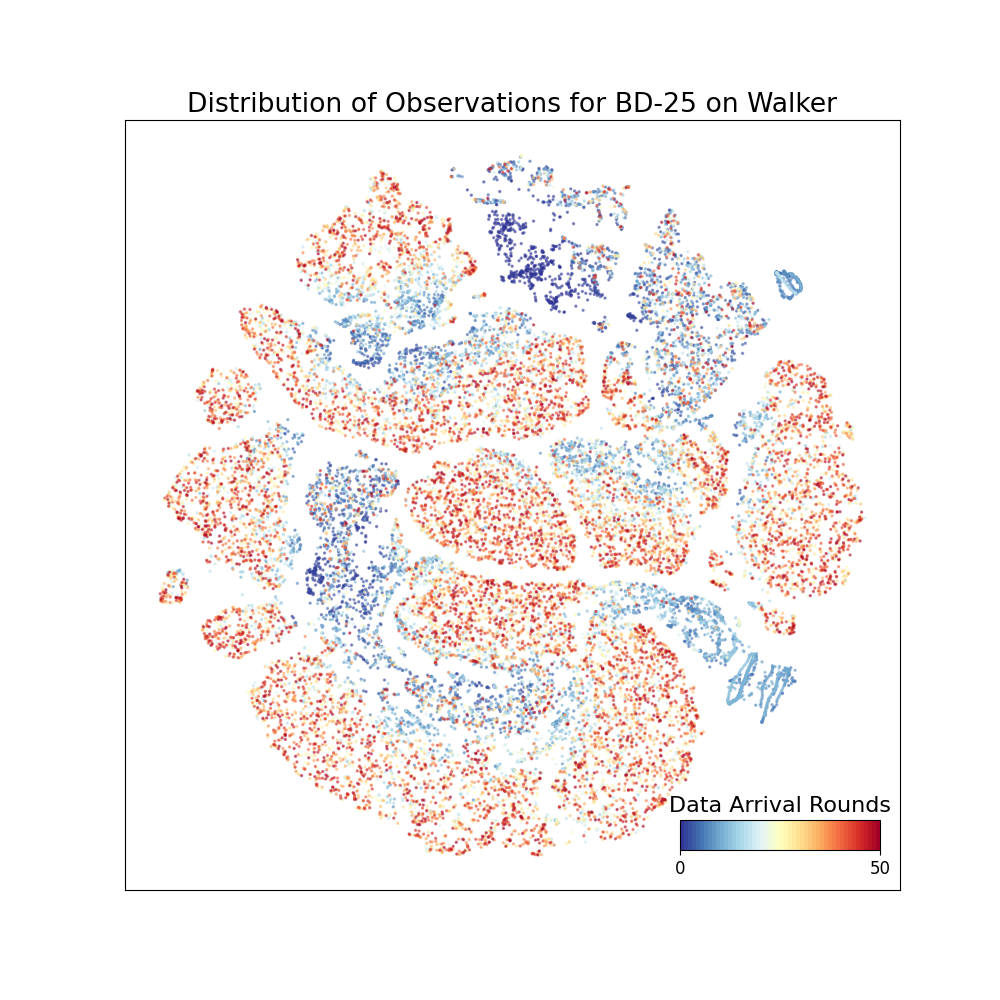

Left: in interactive IL, experts can provide demonstrations retroactively based on the learner’s policy rollouts (picture credit: Stéphane Ross). Middle and right: t-SNE visualization of states seen by offline IL and interactive IL in a simulated control environment.

Exploration in Bandits and Reinforcement Learning

A wide range of sequential decision-making problems require learning agents to explore in order to learn about relevant parts of the environment. For example, a robot needs to traverse part of a maze before it finds its way to the goal; a product recommendation system might gather information about a user’s interests by suggesting products the user may like and observing the user’s reactions. My research in this area has focused on understanding how to explore efficiently in structured environments, e.g., in the presence of large action spaces, multi-task settings, structured reward distributions, and environments with sparsity and low-rank properties.
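As a minimal illustration of the exploration problem, the sketch below runs the textbook UCB1 rule on a hypothetical three-armed Bernoulli bandit. The arm means, horizon, seed, and function name are assumptions chosen for illustration; this is an unstructured baseline and does not exploit any of the structure discussed above.

```python
import math
import random


def ucb1(arm_means, horizon, seed=0):
    """Run UCB1 on a simulated Bernoulli bandit and return per-arm pull counts.

    arm_means are hypothetical success probabilities, unknown to the learner.
    """
    rng = random.Random(seed)
    k = len(arm_means)
    counts = [0] * k      # number of times each arm has been pulled
    sums = [0.0] * k      # total reward collected from each arm

    for t in range(1, horizon + 1):
        if t <= k:
            # Pull every arm once so that each empirical mean is defined.
            arm = t - 1
        else:
            # Optimism: empirical mean plus a bonus that shrinks with more pulls.
            arm = max(
                range(k),
                key=lambda a: sums[a] / counts[a]
                + math.sqrt(2.0 * math.log(t) / counts[a]),
            )
        reward = 1.0 if rng.random() < arm_means[arm] else 0.0
        counts[arm] += 1
        sums[arm] += reward
    return counts


counts = ucb1([0.2, 0.5, 0.8], horizon=5000)
print(counts)  # most pulls should concentrate on the last (best) arm
```

The bonus term forces occasional pulls of rarely tried arms, so the learner keeps exploring until the data rule those arms out.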

Active Learning

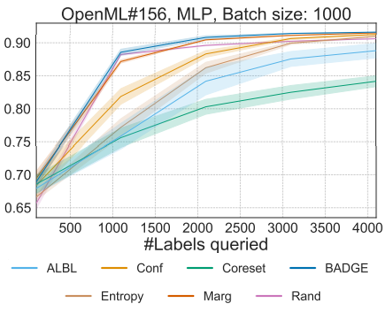

Although unlabeled examples are abundant and easy to obtain in many applications of ML, obtaining label annotations can be very time- and labor-consuming. Active learning aims to use interaction to reduce label annotation effort: by adaptively making label queries to experts, the learning agent can avoid querying examples whose labels it is already confident about and instead focus on informative examples. My research aims to design principled approaches for active learning whose label requirements approach fundamental information-theoretic limits while ensuring computational efficiency and noise tolerance, as well as practical heuristics. I am also interested in the interplay of active learning with other fields, such as uncertainty quantification, fairness estimation, and game theory.
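The label savings are easiest to see in a toy case. The sketch below assumes a one-dimensional threshold classifier and a noise-free labeler (both simplifying assumptions, as are the pool, the threshold value, and the function names): binary search over a sorted pool of 1000 unlabeled points finds the decision boundary with at most 10 label queries, whereas labeling the whole pool would take 1000.

```python
def active_learn_threshold(pool, oracle):
    """Locate the decision boundary in a sorted pool by binary search.

    Returns (index of the first positive example, number of label queries).
    Assumes labels are noise-free and monotone in the pool order.
    """
    lo, hi = 0, len(pool)  # invariant: the first positive index lies in [lo, hi]
    queries = 0
    while lo < hi:
        mid = (lo + hi) // 2
        queries += 1
        if oracle(pool[mid]):
            hi = mid        # positive label: boundary is at mid or to its left
        else:
            lo = mid + 1    # negative label: boundary is strictly right of mid
    return lo, queries


pool = [i / 1000 for i in range(1000)]   # hypothetical sorted unlabeled pool
true_threshold = 0.6185                  # hidden from the learner


def oracle(x):
    """Hypothetical noise-free expert: label is positive iff x >= threshold."""
    return x >= true_threshold


boundary, queries = active_learn_threshold(pool, oracle)
print(boundary, queries)
```

Every point outside the shrinking interval `[lo, hi]` already has a label implied by earlier answers, so the learner never spends a query on it. Handling label noise and richer hypothesis classes requires more careful algorithms than this sketch.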

Multi-task and Transfer Learning

Real-world learning agents are not trained and tested on just one task; they usually learn and act in many different tasks at the same time, borrowing insights learned from one task to learn better in others. This is especially relevant in the modern foundation model era, where the “pretrain-then-finetune” paradigm has become standard. My research tries to quantify when and how one can use auxiliary sources of data to provably benefit learning and decision making, in settings such as active learning, contextual bandits, multi-task learning, and meta-learning.

Interdisciplinary Collaborations

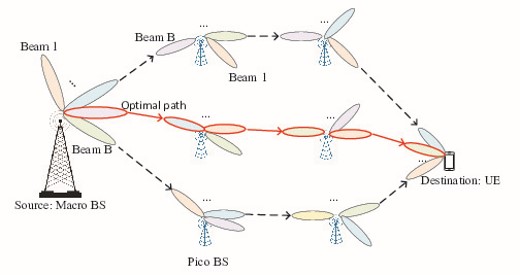

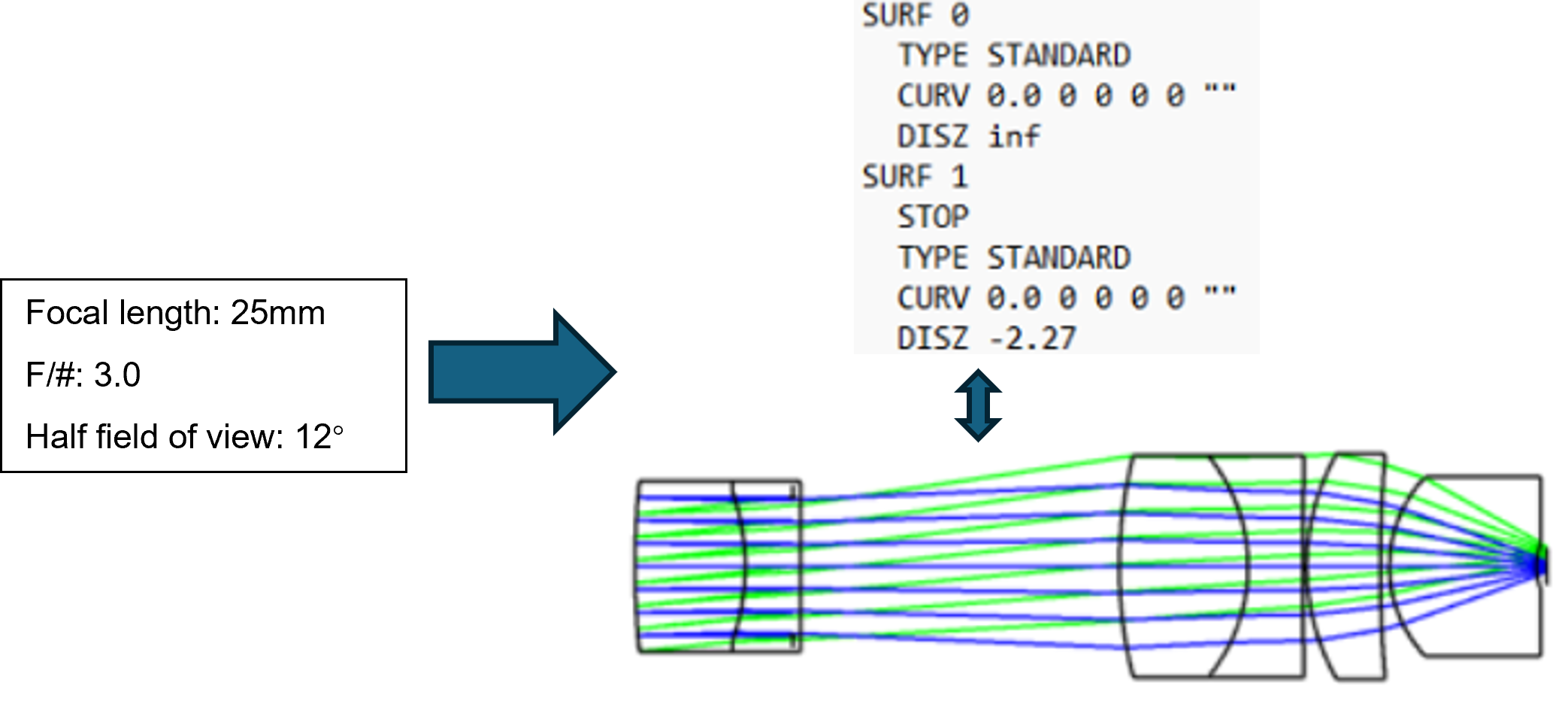

Besides machine learning methodology research, I am also interested in working with domain experts to tailor general methods to practical applications. These efforts include developing large-action-space bandit algorithms for beam and path selection in wireless communication; designing fairness-aware bandit algorithms for network coexistence; building interpretable classifiers for oral cancer detection; and generating starting points for lens design using LLMs.